Feedster: How to filter news by goals

The ideas, wins, and pitfalls we met along the way of shaping a product out of a bold idea

How much time do you spend reading the news every day? How much of that time feels useful? Is there room to improve the quality of the information you’re consuming? Or its relevance?

Feedster targets exactly this room for improvement. With it, we aim to answer the question of how to extract the most value from the daily stream of updates while significantly reducing the time spent on it. GPT helps out here.

In a nutshell, the idea is to filter these information streams by given learning goals. For example, if you want to stay updated on the Node.js ecosystem, you write a prompt, and Feedster tests each incoming item against your list of goals and lets it through only if it resonates.

The idea sounds awesome, but we struggle to shape a product out of it.

Initially, we wanted to create a feed-based app, but as an MVP we ended up building a digest machine: you get your news daily as a filtered and summarized digest.

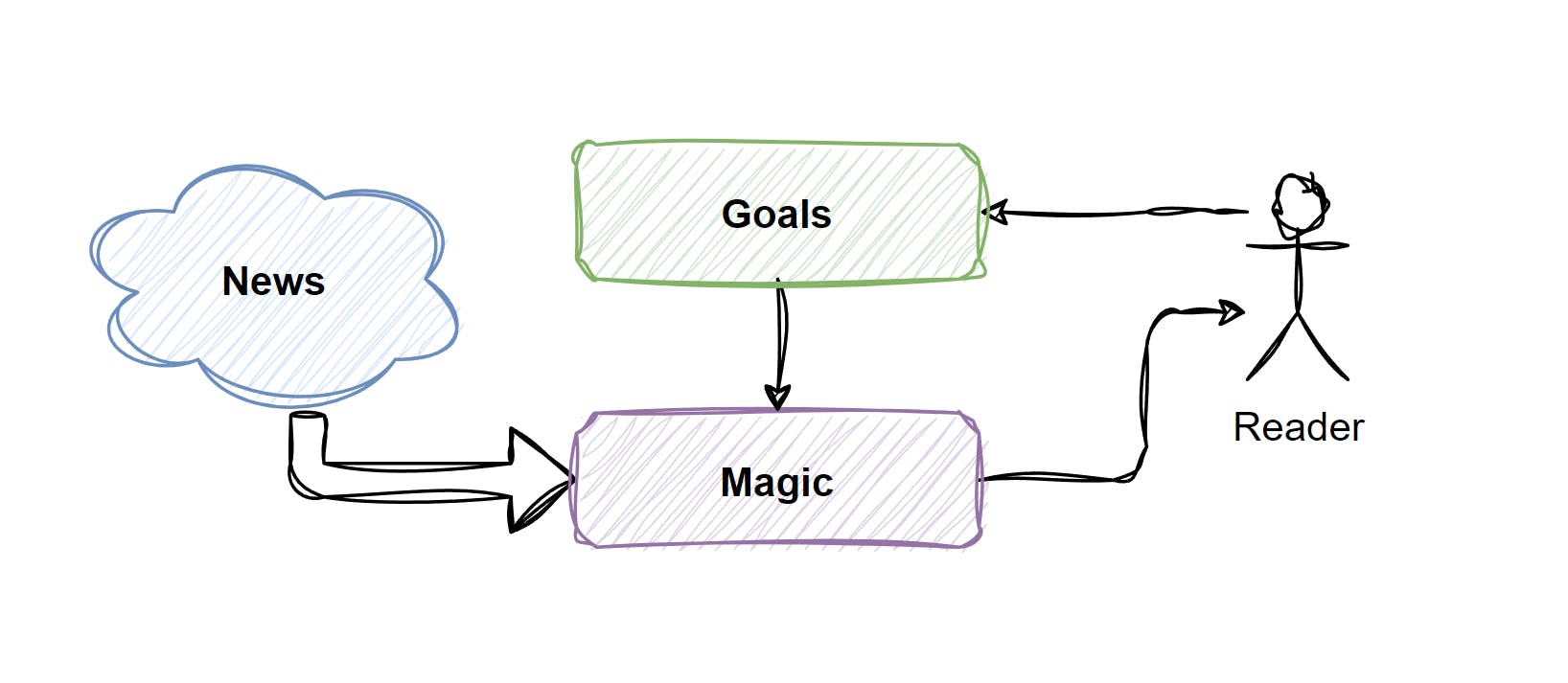

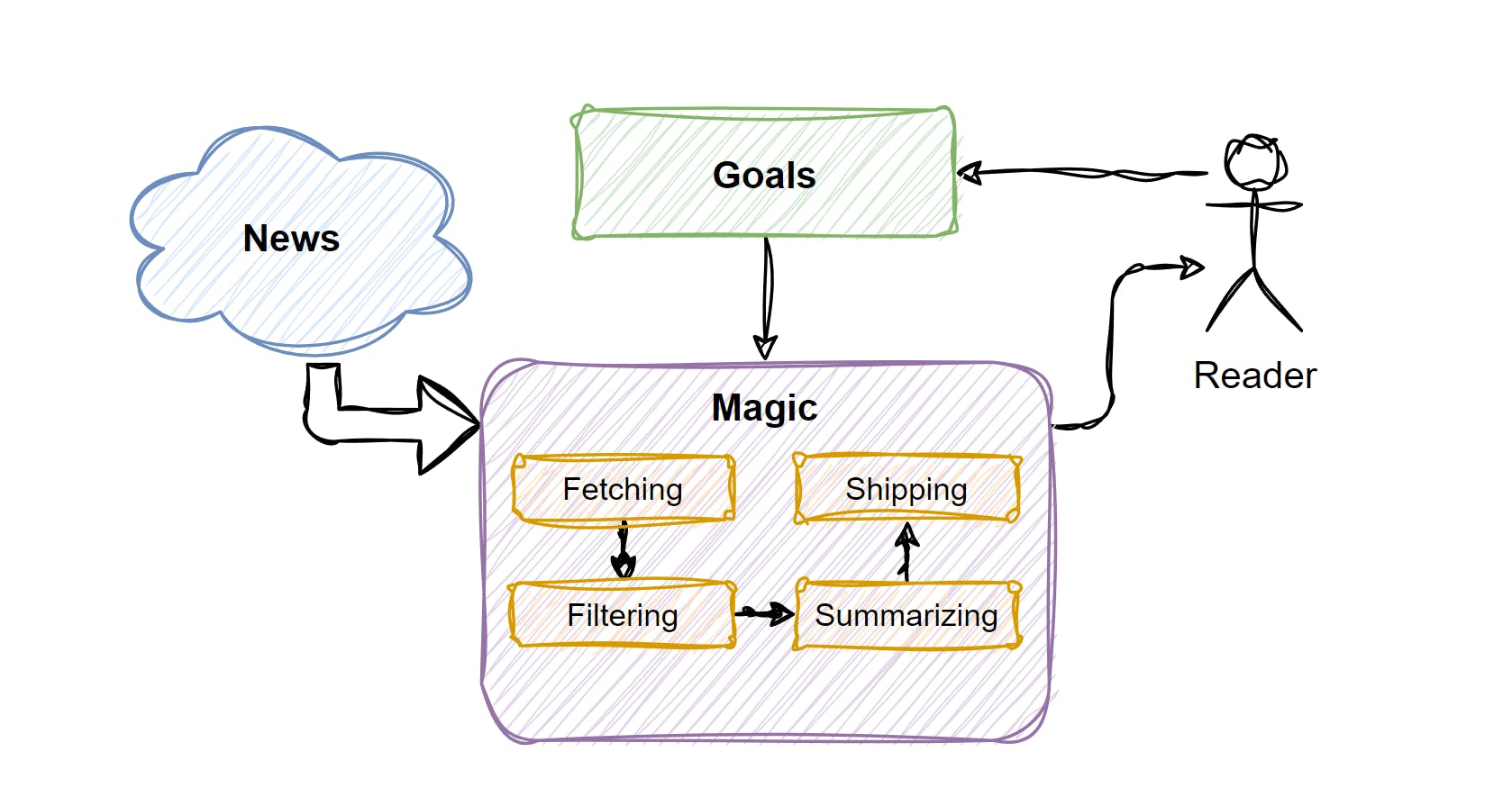

The whole data flow behind the daily digest can be split into 4 steps:

Fetch data from sources

Test each piece of information against user-defined goals and filter out what does not resonate

Summarize the rest into a set of bullet points

Ship the 5-minute digest to the user
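The four steps above can be read as a small pipeline. The Python sketch below is only an illustration of that flow: the `Item` type and the `fetch`, `resonates`, `summarize`, and `ship` callables are hypothetical placeholders we made up for the example, not the actual Feedster code.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Item:
    """One piece of incoming information (hypothetical shape)."""
    title: str
    url: str
    text: str


def run_daily_digest(
    fetch: Callable[[], Iterable[Item]],
    resonates: Callable[[Item, List[str]], bool],
    summarize: Callable[[Item], str],
    ship: Callable[[str], None],
    goals: List[str],
) -> str:
    """Run the four steps in order and return the digest text."""
    items = list(fetch())                                # 1. fetch from sources
    kept = [it for it in items if resonates(it, goals)]  # 2. filter by goals
    summaries = [summarize(it) for it in kept]           # 3. summarize the rest
    digest = "\n\n".join(summaries)
    ship(digest)                                         # 4. ship to the user
    return digest
```

Passing the steps in as callables keeps the flow testable without touching real feeds, mailboxes, or a model.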

Let’s break them down one by one.

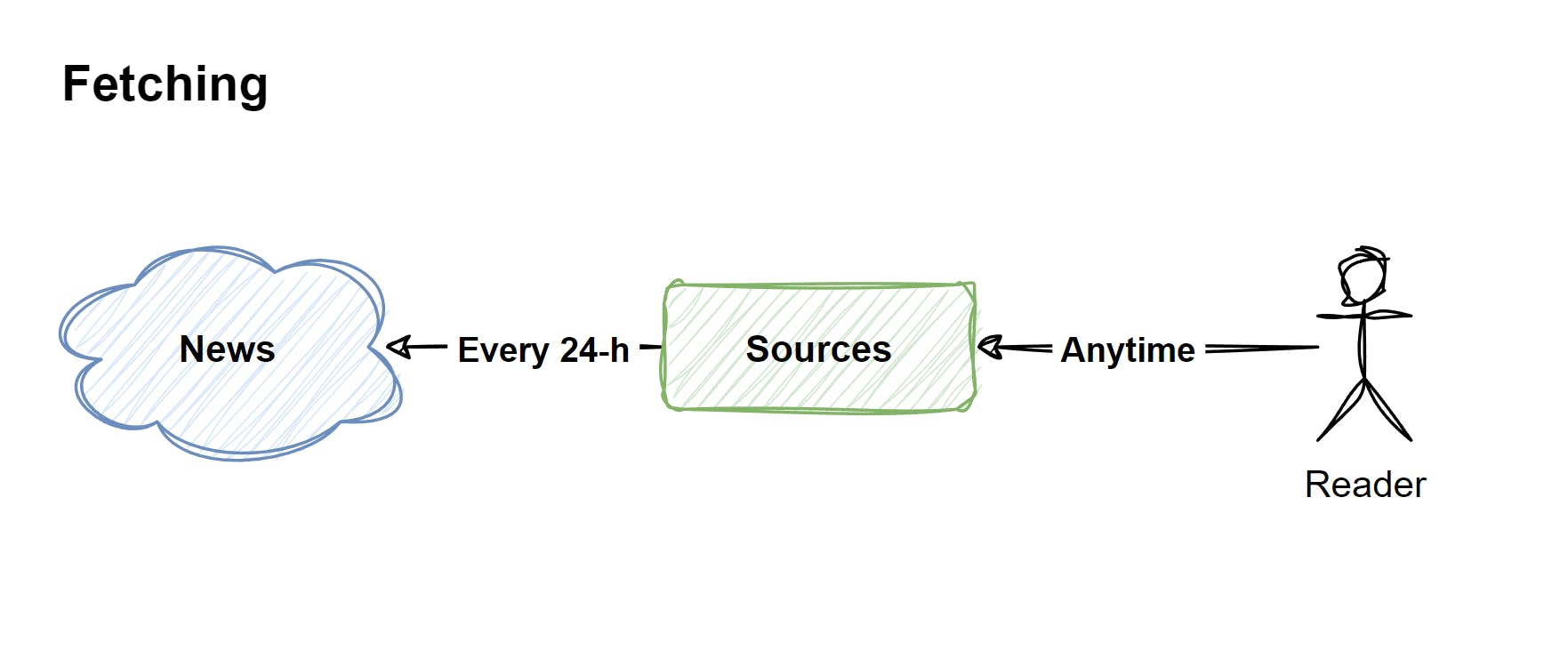

Fetching data from sources

The whole information journey starts with sources. We started with the two we use ourselves: RSS feeds and Gmail newsletters.

Gmail is a bit tricky: you need either a separate email address or a dedicated label (which is what we did) so that newsletters don't get mixed up with regular messages.

More sources are entirely possible. The best-case scenario is to expose a universal API so that any kind of source can be connected through no-code tools like Zapier. Many people would like to use more targeted marketing sources such as Google Alerts, for instance, to keep an eye on their competitors.

By default, once a day we check all connected sources for updates from the last 24 hours. The hard part is balancing how much information to fetch and process: the less we take in, the less comprehensive the digest will be, but if we take in too much, reading the summary will no longer be the 5-minute task we want it to be.
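The 24-hour window itself is easy to express. Here is a minimal sketch, assuming each fetched update carries a timezone-aware publication timestamp; the `Update` shape and the `last_day_updates` name are ours, picked just for the example.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

# (title, published_at) pairs: a hypothetical minimal shape for a fetched update.
Update = Tuple[str, datetime]


def last_day_updates(
    updates: List[Update],
    now: Optional[datetime] = None,
    window: timedelta = timedelta(hours=24),
) -> List[Update]:
    """Keep only updates published within the window that ends at `now`."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - window
    # Half-open interval (cutoff, now], so an update sitting exactly on the
    # boundary is not picked up by two consecutive daily runs.
    return [u for u in updates if cutoff < u[1] <= now]
```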

We propose a win-win solution: process the entire dataset, rank it based on goal alignment, and only ship the most relevant 5-minute digest.

It would require more tokens but GPT is getting cheaper, right? :)
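The rank-then-trim idea could look roughly like the sketch below. It assumes every candidate already has a goal-alignment score and a summary, and it converts the 5-minute budget to words using an assumed reading speed of 200 words per minute. That number, the `ScoredSummary` type, and the greedy selection are our illustrative choices, not a description of what Feedster does today.

```python
from dataclasses import dataclass
from typing import List

WORDS_PER_MINUTE = 200  # assumed average reading speed, not a Feedster setting


@dataclass
class ScoredSummary:
    title: str
    summary: str
    score: int  # goal-alignment score, higher means more relevant


def pick_for_digest(
    candidates: List[ScoredSummary], minutes: float = 5.0
) -> List[ScoredSummary]:
    """Greedily keep the best-scoring summaries that fit the reading budget."""
    budget = int(minutes * WORDS_PER_MINUTE)
    picked: List[ScoredSummary] = []
    used = 0
    # sorted() is stable, so candidates with equal scores keep their input order.
    for cand in sorted(candidates, key=lambda c: c.score, reverse=True):
        words = len(cand.summary.split())
        if used + words <= budget:
            picked.append(cand)
            used += words
    return picked
```

A greedy pass is not an optimal packing, but it is predictable: a highly ranked item is never dropped in favor of several weaker ones.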

Filtering

The prompt engineering journey starts here.

Once all the data is in place, we need to test each piece of information against the user's goals. It sounds pretty simple, but in practice a lot of pitfalls come up.

The straightforward approach would be to simply ask GPT whether each news post resonates with the goals or not, but it turned out that it sometimes gives a false yes or a false no because it doesn't understand how some topics can be connected to some pieces of information. We will elaborate on these discrepancies once we have researched them more. For now, one of our solutions is to ask GPT for a match score on a scale (e.g. from 1 to 5) instead of a plain "yes" or "no", which turned out to be much more robust.
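To make the scale idea concrete, here is a minimal sketch of asking for a 1-to-5 score and parsing the reply. The prompt wording, the threshold of 4, and the `ask_model` callable (any function that takes a prompt string and returns the model's text reply) are assumptions made for illustration; they are not our production prompts.

```python
import re
from typing import Callable, List, Optional


def build_score_prompt(goals: List[str], text: str) -> str:
    """Ask for a 1-5 match score instead of a yes/no answer."""
    goal_lines = "\n".join(f"- {g}" for g in goals)
    return (
        "Rate how well the text matches the reader's goals on a scale "
        "from 1 to 5, where 1 means unrelated and 5 means a direct match.\n"
        "Reply with a single digit only.\n\n"
        f"Goals:\n{goal_lines}\n\nText:\n{text}"
    )


def parse_score(reply: str) -> Optional[int]:
    """Return the first standalone digit 1-5 in the reply, or None."""
    match = re.search(r"\b([1-5])\b", reply)
    return int(match.group(1)) if match else None


def resonates(
    ask_model: Callable[[str], str],
    goals: List[str],
    text: str,
    threshold: int = 4,  # assumed cut-off, worth tuning per user
) -> bool:
    """Keep an item only if the model's score reaches the threshold."""
    score = parse_score(ask_model(build_score_prompt(goals, text)))
    return score is not None and score >= threshold
```

An unparseable reply is treated as "does not resonate" here. Whether to drop such items or retry them is a product decision.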

However, even with the scale, GPT still misses some good items and lets some irrelevant ones through. The biggest problem we face right now is telling the algorithm the reader's level of proficiency. For example, if my goal is to get updates about JavaScript and I'm a senior developer, I would not be happy to find "how to write a for-loop" kind of articles in my inbox every day.

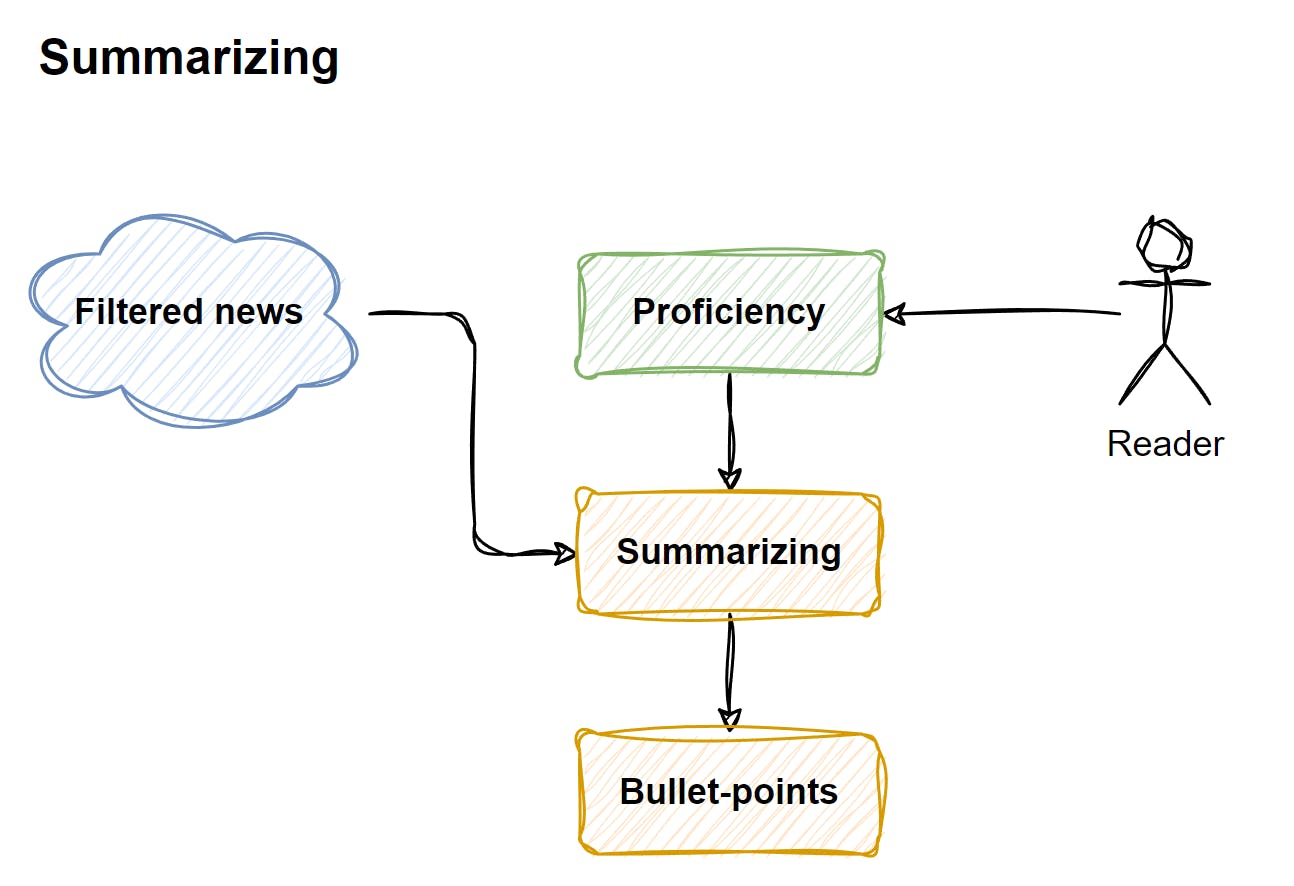

Summarizing

Okay, it seems that fetching and filtering information aren't easy things to handle, but what could go wrong with summarization? Just ask GPT to cut out all the filler and turn the whole thing into bullet points.

Not so easy.

Once again, with the straightforward approach, GPT starts explaining things nobody asked it to explain. For example, our developer Kirill subscribed to Git updates, and the summary of the latest one included a couple of bullets explaining what Git is!

So the reader's "proficiency" pops up as a problem here as well. GPT is very kind to us and assumes that we need more context when reading about niche things such as Git, but for a personalized news reader, which should know what you want to read, that is a bad thing.

This is where prompt engineering comes in once again.
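One possible direction is to state the reader's level in the summarization prompt and explicitly forbid background explanations. The sketch below only builds the prompt and parses the bullets out of a reply. The wording, the `proficiency` parameter, and both function names are illustrative assumptions, and we make no claim that this particular prompt fully solves the problem.

```python
from typing import List


def build_summary_prompt(
    text: str,
    goals: List[str],
    proficiency: str = "senior developer",
    max_bullets: int = 5,
) -> str:
    """Build a summarization prompt that says what the reader already knows."""
    goal_list = "; ".join(goals)
    return (
        f"The reader is a {proficiency} who follows these topics: {goal_list}.\n"
        "Assume they already know the basics of each topic, so do not define "
        "or introduce well-known tools and terms.\n"
        f"Summarize only what is new in the text below in at most {max_bullets} "
        "bullet points, one per line, each starting with '- '.\n\n"
        f"Text:\n{text}"
    )


def parse_bullets(reply: str) -> List[str]:
    """Extract bullet lines from a model reply, ignoring any other text."""
    bullets = []
    for line in reply.splitlines():
        line = line.strip()
        if line.startswith("- "):
            bullets.append(line[2:].strip())
    return bullets
```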

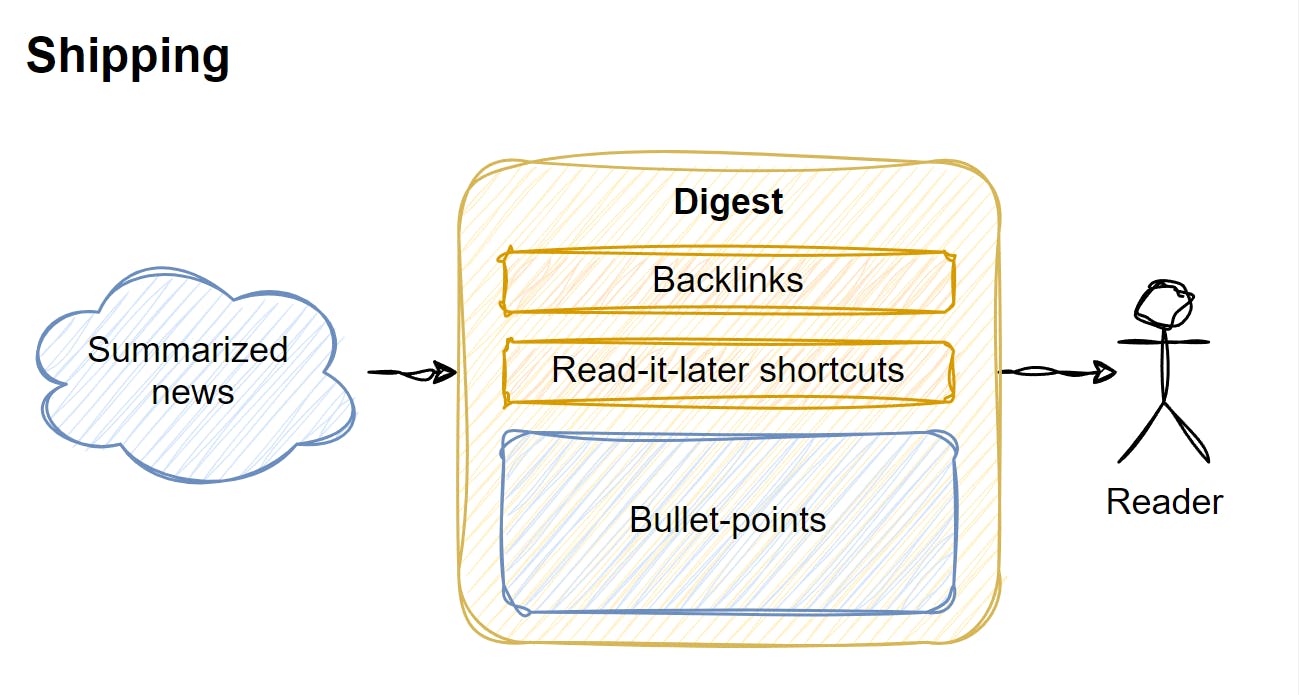

Forming the digest

Finally, we have our summary, all the backlinks, and goals that showed up as relevant, and we combine the whole thing into a digest you get in your inbox.
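As an illustration, combining those three pieces into a plain-text email body could look like the sketch below. The `DigestEntry` fields and the layout are hypothetical, chosen only to show the summary, the backlink, and the matched goals side by side.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DigestEntry:
    title: str
    url: str            # backlink to the original source
    bullets: List[str]  # summary bullet points
    goals: List[str]    # goals this entry turned out to be relevant to


def render_digest(
    entries: List[DigestEntry], heading: str = "Your daily digest"
) -> str:
    """Render entries as a plain-text email body."""
    lines = [heading, ""]
    for entry in entries:
        lines.append(entry.title)
        lines.append(f"Goals: {', '.join(entry.goals)}")
        lines.extend(f"- {b}" for b in entry.bullets)
        lines.append(f"Source: {entry.url}")
        lines.append("")  # blank line between entries
    return "\n".join(lines).rstrip() + "\n"
```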

Here's one more pitfall I faced when setting things up for myself. I'm a Readwise user, and I want to process what I read and save the parts that resonate to my library. With RSS, the workflow is pretty easy:

Place a backlink in the digest

Go to the source

Save it to a read-it-later app

It sounds simple, but it raises the question of how to actually save time using the summary, for example by jumping to the specific part of the article instead of saving the whole text (which could be overwhelmingly long) and reading it later in search of the interesting parts.

With newsletters, the workflow is even clumsier: if you want to save an interesting issue to read later, you probably need to forward it to a dedicated email address or put a special label on it.

All of these options are hard to anticipate from the start, and that’s where good product design can help us out.

What’s next?

What you are seeing is an early-stage product taking shape, before we have even gone out to validate it. We see several areas for improvement:

Product design - the solution we have right now might be fine for innovator-engineers who are comfortable running things via a CLI and experimenting to get the best out of it. It's definitely not for everyone, and that’s why we’re on a journey to turn the algorithm into a product.

Robustness - you shouldn’t miss your digest because of an OpenAI API timeout. We still have a bunch of engineering challenges like this to address.

Prompt engineering - as with any GPT-based product, Feedster can be significantly improved by tuning the prompts based on user experience. We see huge room for improvement in this area.

Customer discovery - it’s a pain point for any engineer who wants to run a startup: we want to build, not go out and do marketing. Talking to experts who can help here could be a way out.
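On the robustness point, the usual first step is to retry a timed-out call with exponential backoff rather than skip the digest. The sketch below is a generic illustration, not our current code: it catches Python's built-in `TimeoutError`, whereas a real API client raises its own exception types, so the `except` clause would need adjusting. The `call_with_retries` name and its parameters are hypothetical.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying on TimeoutError with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except TimeoutError:
            if attempt == attempts - 1:
                raise  # out of retries, let the caller decide what to do
            sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, 4s, ...
    raise RuntimeError("unreachable")  # keeps type checkers satisfied
```

Taking `sleep` as a parameter makes the backoff easy to test without actually waiting.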

So if you are a designer, marketer, or engineer obsessed with AI, and you see a bright future in goal-driven news consumption, feel free to reach out. Let’s talk!